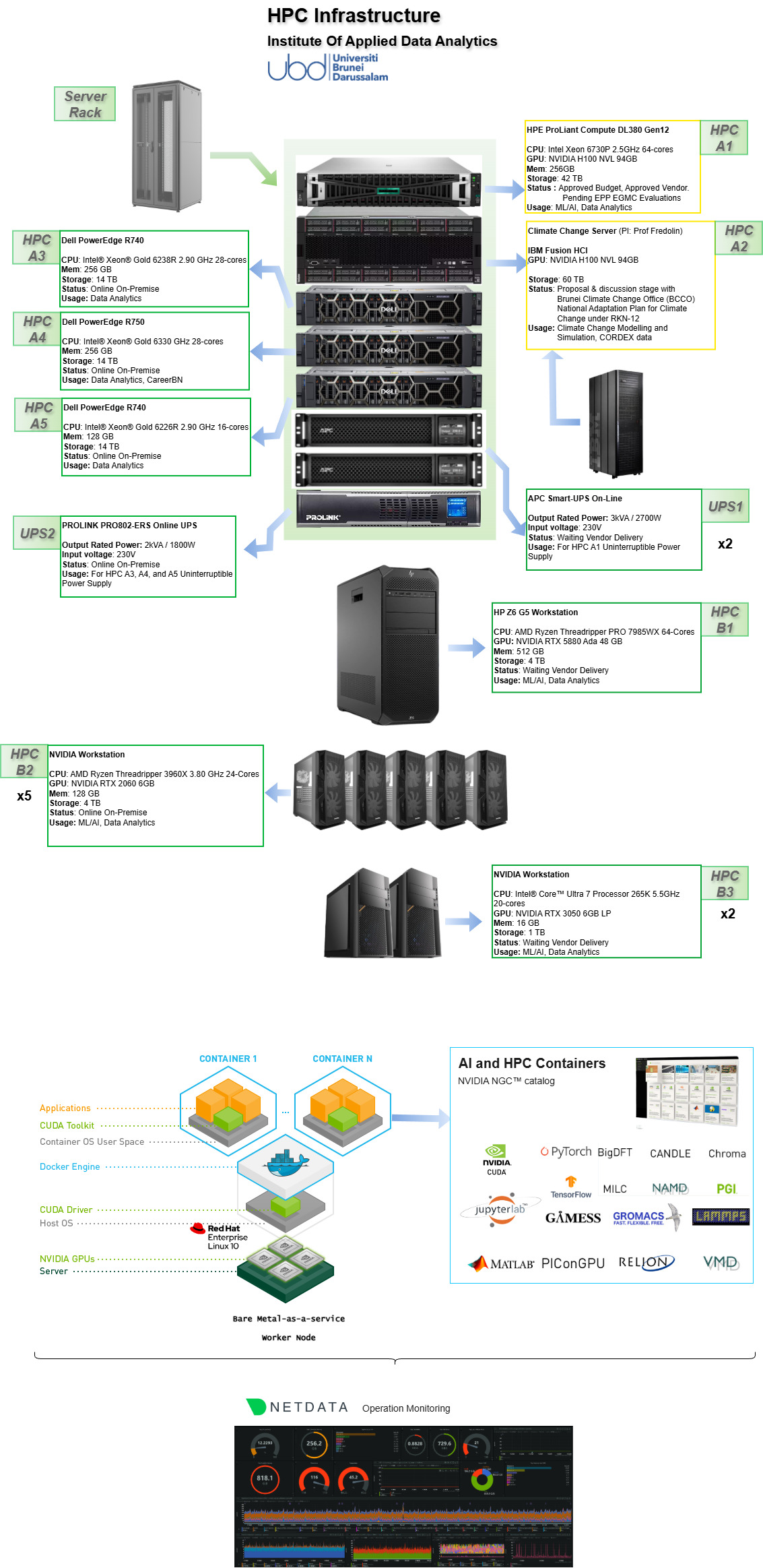

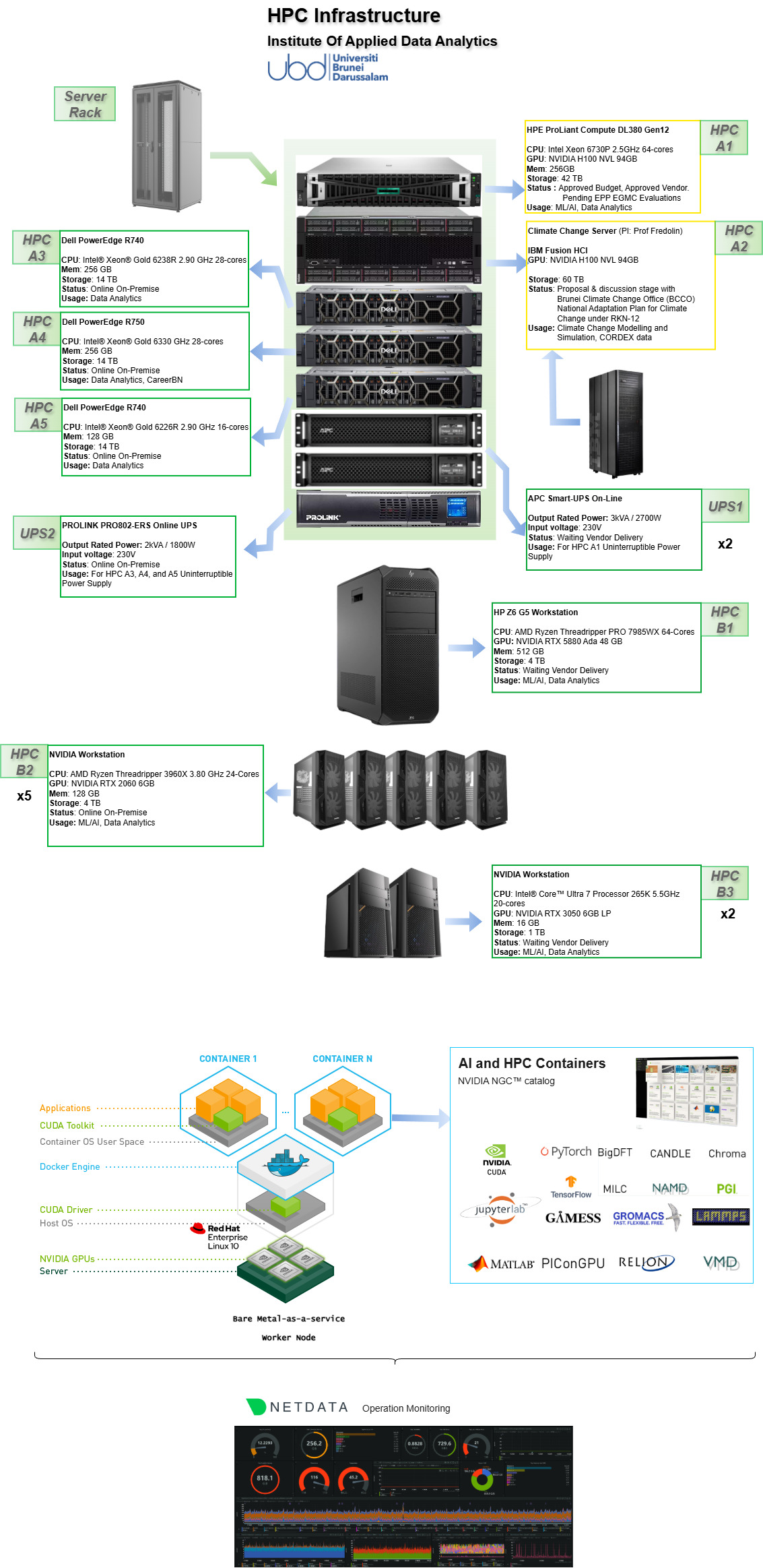

HPC A1

HPE ProLiant Compute DL380 Gen12

- CPU: Intel Xeon 6730P 2.5 GHz 64-cores

- GPU: NVIDIA H100 NVL 94 GB

- Mem: 256 GB

- Storage: 42 TB

- Status: Approved Budget, Approved Vendor, Pending EPP EGMC Evaluations

- Usage: ML/AI, Data Analytics

HPC A2

Climate Change Server

IBM Fusion HCI

- GPU: NVIDIA H100 NVL 94 GB

- Storage: 60 TB

- Status: Proposal and discussion stage with the Brunei Climate Change Office (BCCO), for the National Adaptation Plan for Climate Change under RKN-12

- Usage: Climate Change Modeling and Simulation, CORDEX data

HPC A3

Dell PowerEdge R740

- CPU: Intel® Xeon® Gold 6238R 2.90 GHz 28-cores

- Mem: 256 GB

- Storage: 14 TB

- Status: Online On-Premise

- Usage: Data Analytics

HPC A4

Dell PowerEdge R750

- CPU: Intel® Xeon® Gold 6330 28-cores

- Mem: 256 GB

- Storage: 14 TB

- Status: Online On-Premise

- Usage: Data Analytics, CareerBN

HPC A5

Dell PowerEdge R740

- CPU: Intel® Xeon® Gold 6226R 2.90 GHz 18-cores

- Mem: 128 GB

- Storage: 14 TB

- Status: Online On-Premise

- Usage: Data Analytics

HPC B1

HP Z6 G5 Workstation

- CPU: AMD Ryzen Threadripper PRO 7985WX 64-Cores

- GPU: NVIDIA RTX 5880 Ada 48 GB

- Mem: 512 GB

- Storage: 4 TB

- Status: Waiting Vendor Delivery

- Usage: ML/AI, Data Analytics

HPC B2 x5

NVIDIA Workstation

- CPU: AMD Ryzen Threadripper 3960X 3.80 GHz 24-Cores

- GPU: NVIDIA RTX 2060 6 GB

- Mem: 128 GB

- Storage: 4 TB

- Status: Online On-Premise

- Usage: ML/AI, Data Analytics

HPC B3 x2

NVIDIA Workstation

- CPU: Intel® Core™ Ultra 7 Processor 265K 5.5 GHz 20-Cores

- GPU: NVIDIA RTX 3050 6 GB LP

- Mem: 16 GB

- Storage: 1 TB

- Status: Waiting Vendor Delivery

- Usage: ML/AI, Data Analytics

UPS1 x2

APC Smart-UPS On-Line

- Output Rated Power: 3 kVA / 2700 W

- Input voltage: 230 V

- Status: Waiting Vendor Delivery

- Usage: Uninterruptible power supply for HPC A1

UPS2

PROLINK PRO802-ERS Online UPS

- Output Rated Power: 2 kVA / 1800 W

- Input voltage: 230 V

- Status: Online On-Premise

- Usage: Uninterruptible power supply for HPC A3, A4, and A5
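
Each UPS entry gives its output rating as a kVA / W pair. The two figures are related by the power factor, which is real power (W) divided by apparent power (VA). The sketch below works that arithmetic for the ratings listed above. The names `UpsRating`, `power_factor`, and `total_real_power_w` are hypothetical, introduced only for illustration. The combined figure for the two UPS1 units is a simple sum of nameplate ratings and assumes nothing about how the units are actually wired, since the listing does not say.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class UpsRating:
    """Nameplate output rating of a UPS model (hypothetical helper type)."""
    name: str
    apparent_power_va: float  # rated apparent power in volt-amperes
    real_power_w: float       # rated real power in watts
    units: int = 1            # number of identical units listed

    def power_factor(self) -> float:
        # Power factor = real power / apparent power.
        return self.real_power_w / self.apparent_power_va

    def total_real_power_w(self) -> float:
        # Simple sum of nameplate ratings across the listed units.
        return self.real_power_w * self.units


# Figures taken from the UPS1 and UPS2 entries in the listing.
UPS_RATINGS = [
    UpsRating("UPS1", apparent_power_va=3000, real_power_w=2700, units=2),
    UpsRating("UPS2", apparent_power_va=2000, real_power_w=1800),
]

for ups in UPS_RATINGS:
    print(
        f"{ups.name}: power factor {ups.power_factor():.2f}, "
        f"combined nameplate {ups.total_real_power_w():.0f} W"
    )
```

Both models work out to the same power factor. Whether the attached servers stay within these ratings depends on their measured power draw, which the listing does not give.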